The Actual Risks of Generative AI

We learned from our Meeting of the Minds of top AI experts that the risks we do face seem solvable over time & we can responsibly move forward

Let’s start with fire, the first great general purpose super-tool harnessed by humans. Take yourself back to that original campfire where the tribe is discussing the pros and cons of this amazing new discovery.

“Damn, this fire does wonders for meat. Sure beats chewing on raw flesh for hours. And you gotta love the heat this fire gives off to keep us warm at night. Then there’s the bonus that this fire thing scares off all those wolves that keep messing with us.”

“Yeah, but I burned my hand grabbing that log. And what happens if that fire spreads to our thatch hut and burns that down? I think we should douse the flames.”

Needless to say, the naysayer lost out in the ensuing vote, the tribe figured out how to deal with fire risks, and humans moved on to a series of other general purpose technologies that gave them more super-powers.

Like electricity, whose arrival in the late 19th century put humans through the same pros and cons drill.

“On the plus side, this electricity does light up our homes, and then lights up the streets, which is pretty awesome. A lot better than hanging out in the dark. And then this same electricity can power these things called radios that let us listen to music from afar. You gotta like that.”

“Yeah, but think of the workers who have to string up those power lines - some of them might get electrocuted. And someday some kid is going to stick a fork into an electric socket in his home and die. I say we ban it.”

Needless to say, the electricity critic was overruled and the world built out a relatively safe infrastructure to carry electricity, complete with building codes, safety inspections, and plastic plugs to cover electric sockets when you have kids.

Then we have the most recent example of the arrival of the internet in the late 20th century.

The pro camp pointed out the many advantages of connecting up everyone in the world: search all the world’s information in a second, talk over video with someone on the other side of the planet, stay in touch with myriad friends and family, work from anywhere.

The con camp warned that the internet would be used to spread porn, or commit crimes, or spread false information.

And yet we humans forged ahead with connecting up more than 60 percent of all humans on the planet, and are well on our way to getting nearly 100 percent on by the end of this decade. And we have figured out many ways to deal with the downsides and are still fine-tuning better ways forward today.

Why? Because the advantages of the internet - like electricity and fire - simply overwhelm the risks. And the risks for all these general purpose technologies can be, and always have been, mitigated over time.

The arrival of Generative AI has thrust humans into our next step change in capabilities through the introduction of our next general purpose technology in the form of Artificial Intelligence. And right on cue societies around the world have erupted into charged conversations about the pros and cons of this amazing new technology.

The pros about the positive potential of Generative AI are truly amazing. This nascent technology could, in the next few years, give almost all workers a high-functioning personal assistant that could double their productivity and add up to higher economic growth rates around the world. Soon, all students could have a dedicated personal tutor that could greatly improve their understanding of material — and test scores. The healthcare system could get reinvented around personalized medicine, which could also drive down costs. And the list of previously unimaginable possibilities just keeps going on and on.

In June, I hosted an event on The Positive Possibilities of Generative AI that brought together about 250 informed innovators to ground zero in San Francisco (with a lot of help from our partners and sponsors). A dozen remarkable experts in the AI space gave short talks that explained those many pros. (Read about all of them in the link above.)

But the media has been filled with reports of all the disasters that Generative AI could bring as well. The jobs for humans will vanish, and we’ll have mass unemployment. The internet will be filled with misinformation generated by bots, and our elections will be compromised. This powerful tool, in the hands of bad actors, could lead to human extinction. The intelligent machines themselves will improve so much that they will become our overlords.

To my mind, some of these worries seemed overblown. The media coverage has skewed towards the negative, underplaying or even avoiding all the positive possibilities. But that said, it seems prudent at this juncture to keep an open mind and seriously examine the claims of both sides, the pros and cons.

So, in July, I hosted an event on The Actual Risks of Generative AI that brought together another 250 innovators who were more focused on the problems that this new technology could create (again with the help of our partners and sponsors identified at the end of this piece). We had a dozen of the most remarkable of them give their thoughts in short 5-minute talks. And we ended by voting on which of a dozen risks we should prioritize in terms of importance and urgency.

This essay will lay out what we learned over the course of the night. I want to start by pointing out the meta-insight that seemed to emerge overall: There are plenty of risks with Generative AI, but these risks can be managed and almost certainly will be. We must act responsibly, but we must move forward.

We’ve been through this drill before, with fire, with electricity, with the internet, and now with artificial intelligence, possibly the most remarkable super-tool of them all.

Facing down the possibilities of human extinction

Jerry Kaplan has been around in this Artificial Intelligence world for a long time. In 1981 Kaplan cofounded one of the first AI companies in Silicon Valley called Teknowledge, which had an IPO and was publicly traded. (He is also credited with inventing the first tablet computer decades before the iPad.) Kaplan is now about to publish his most recent book: Generative AI: Everything You Need to Know. But even a seasoned technologist like him has been blown away by recent developments in Gen AI.

“I've never been a big booster about AI, but I think we need to face something here, admit something which is that Generative AI, GAI, is artificial general intelligence,” Kaplan said. “We are there. We have achieved this, or we're about to cross this boundary.”

Kaplan said in his new book he looks at various major inventions in history like the wheel, the printing press, photography, airplanes, computers, and the internet. And he tried to assess their relative impact on human societies. The idea that intelligent machines are now about to be hooked up via the internet to act in the world and use other tools in a wide variety of general applications once reserved as the sole purview of humans - puts Gen AI in a different league.

“The truth is, Generative AI is going to be much more important than any of these inventions, I'm quite convinced of that. It might be the most important human invention ever,” Kaplan said. “I'm genuinely, seriously grateful to have lived to see this moment. I had never expected to see this in my life.”

So the stakes are high when it comes to Gen AI. We don’t want to shut down what is potentially the most important invention ever. We want to be careful about how much we constrain what we can try to do with it, or slow down its development too much at this early stage of great promise.

So we need to start looking at which actual risks must be dealt with as we move forward. Part of that process is setting aside the risks that are ridiculous, blown out of proportion, or so distant that they can be left alone for now, possibly for decades, until they become real.

Kaplan thinks the fear of artificial intelligence getting so powerful that it becomes a super intelligence beyond human understanding and human control is “nonsense.” He said we should not fear the “singularity” when AI becomes our overlords or considers wiping us all out.

“They are not coming for us. There is no They. That's not what this is about. We're building tools,” Kaplan said. “These systems have no desires and no intentions of their own. They're not interested in taking over. They never will be.”

Before you get too comfortable with that reassurance that intelligent machines themselves won’t want to get rid of us, you should hear out another of our speakers, the machine translation pioneer De Kai. He is a professor in Artificial Intelligence both at U.C. Berkeley’s International Computer Science Institute and The Hong Kong University of Science and Technology. He was also one of the eight inaugural members of Google’s AI Ethics Council before the pandemic.

De Kai is very worried that these powerful tools in the hands of bad actors could cause terrible harm, if not extinction. Think about the mentality of someone who brings an AR-15 into a school and kills multiple children. As tragic as those massacres are, they are relatively limited in their potential for destruction. That same person in the future might harness AI to dramatically extend their reach.

“Until now, humanity has managed to survive Weapons of Mass Destruction only because they've been limited to a handful of highly-resourced nation states,” De Kai said. “But now we're perilously close to the days when AI's enabling not just arsenals of lethal autonomous weapons, but also criminals, terrorists, rebels, even disgruntled individuals who can practically head down to the Walmart or Home Depot, and pick up everything they need.”

“World War I was triggered by the assassination of a single archduke. Would you take a bet that not a single person is going to push their launch button? It's a terrible bet,” he said. “The question is, what would cause someone to push that launch button? Well, loss of hope, overpowering fear, irreconcilable polarization.”

So security issues around bad actors leveraging these same tools that could transform the world for the better seem like a reasonable worry that should be prioritized to the front of the line. And the 250 innovators invited to our gathering from many prominent networks in the tech and innovation economy of the San Francisco Bay Area seemed to agree. In a rough show of hands at the end of the evening, security risks were one of the top three concerns.

Another of those top three concerns from those gathered was the spread of misinformation that could be amplified by Gen AI. This was De Kai’s other huge worry.

“I am very, very worried about Generative AI amplifying fears and polarizing us,” he said. “In this respect, I think Generative AI is even more dangerous than the search recommendation in social media engines that have been making decisions about what to show us, and what not to show us.”

Take the example of someone asking ChatGPT-4 whether climate change was a hoax perpetrated by the Chinese. De Kai said the human-like answer of Gen AI plays into a cognitive bias called the fluency heuristic, where we assign much greater credibility to something that is presented fluently. And how much of the half-dozen or so short paragraphs of an answer should be devoted to explaining that this hoax claim is false?

“We're empowering AIs to decide what to censor from our view. What crucial content to omit from what you see,” he said. “But omission of crucial context is also the single biggest enabler of polarization and fear – not falsehoods, not bad actors.”

The Jobs & Economic Disruption Issues

The introduction of every general purpose technology brings a disruption to the current status quo of human jobs because that new technology brings new capabilities that can be assigned to machines, which are cheaper and more efficient than the old ways previously carried out by humans.

In the long run, humans get reassigned to the next level of more complex tasks beyond the capabilities of that new technology to solve a higher level of problems. This has played out again and again with many, many technologies and all societies eventually adapt to the new realities. Humans never run out of problems to focus human labor on with new kinds of jobs – and we probably never will.

The classic example is the job of a farmer. At the founding of the United States 90 percent of the workforce was farmers. At the turn of the 20th century in 1900 farmers still made up 40 percent of the workforce despite a lot of previous mechanization. And today that number is just 1 percent. Yet today the U.S. unemployment rate is the lowest it has been in 50 years.

In the short run, specific humans with a narrow set of skills can experience the trauma of job displacement when a new technology disrupts their industry. How we handle that transition is a social and political decision that different countries handle differently, said Gary Bolles, the Chair for The Future of Work at Singularity University, and author of The Next Rules of Work.

“Robots and software do not take jobs – they don't – they simply automate tasks,” said Bolles. “It's a human's decision if all of those tasks that are automated add up to a job that goes away – and we can make better decisions. We don't have to decide that that's a discardable human being.”

The big difference today is that the arrival of intelligent machines is moving automation into the realm of the knowledge workers who have been mostly insulated from many of the previous waves of automation around physical work.

“This is a really exciting time for young folks, for old folks, for everyone,” said Ethan Shaotran, the young cofounder of Energize.AI, who moved to San Francisco this summer while still an undergraduate student at Harvard. “One of the most interesting things is that really for the first time in modern history past the industrial revolution and every other technological revolution that's been around, it's not the physical laborers, the farmers, the factory workers who are being displaced, but it's the white collar workers, the knowledge workers, the engineers, lawyers, doctors, teachers, and for a lot of the folks in this room, that's really scary, that's really concerning.”

Bolles pointed out that at these technological junctures the relative power balance between the current workers and the owners or their managers matters a lot in how successfully the transition is carried out. An early example of the trauma ahead is the current strike in Hollywood with the writers and actors demanding new rules on how much the studios can use AI in their productions. It’s not at all clear where this will end up, or where it should end up.

This brings up a related issue of current copyright law. Sometimes these technological junctures blow apart the rationale for legal norms that have settled in over previous decades if not centuries. The arrival of Generative AI might be one of those moments.

Some of these AI models have been trained on essentially all the information on the public internet. When you query something like ChatGPT-4 what you get back is not simply a pointer to a website where humans have created a probable place where you might find the answer to your question. The Gen AI essentially will have read that website, and many others like it, and deliver back to you a synthesis of what that artificial intelligence has learned from all the material out there.

This ability to almost instantaneously synthesize amounts of information far beyond the capability of a single human brain over a lifetime is partly what makes Gen AI so astounding.

However, old school copyright law built up over the decades if not centuries before the arrival of artificial intelligence holds that if you use a human’s legal intellectual property then you must compensate that person for it. That’s how a lot of writers and artists and other creatives earned a living in the old economy.

So how do you reconcile the previous rights of workers who followed the old rules to earn a living in the old economy with the new realities of this new information economy without shutting down these amazing new capabilities? Hollywood is just starting to figure this all out and it ain’t easy.

Before you empathize too much with the old copyright holders or the displaced workers, how about flipping the lens around to the viewpoint of the young Generative AI founders and builders like C.C. Gong, the founder of Montage AI, and cofounder of the “Cerebral Valley” organization that acts as a central hub for many of these new companies in San Francisco.

“What is Cerebral Valley? It's really just a pun, it's not a real thing, but the phenomenon is that Silicon Valley, San Francisco, is reclaiming its throne as the white-hot center of gravity for this AI revolution,” Gong said. “For a while people have been joking that SF is dead. Well, I would say now that Miami is dead, and Austin is dead, because SF is back. It's soooo back.”

She described the palpable excitement in the nearby neighborhoods of San Francisco as young technologists from all over America are quitting their jobs and students from top universities like MIT quitting school to move to ground zero. She said people from all over the world are flying in to attend Gen AI Hackathons and just staying for what they see as the next gold rush.

Yet Gong also said her peers in the many hacker houses and Gen AI startups popping up are very worried about how big tech or giant corporations with huge legal teams will quash the efforts of the more innovative companies.

“I don't think we're thinking so much about the existential risk of AI. We're out here just trying to survive, honestly,” Gong said. “The risks that we're thinking about are: will big enterprise bulldoze us? Is this window of opportunity real? Are we in a bubble? Is anything that we're building going to last?”

They also are worried that fears being fanned in the media will lead to premature regulations that will play to the advantage of incumbent companies and slow down progress.

“As a founder, what we're thinking about in terms of risk implications at the society level-wise, is can we come together in the middle where there are self-regulating standards that we can set up together?” Gong said. “The last thing we want is heavy-handed regulation that stops progress, and that actively works to take us backward.”

The Rough Regulatory Way Forward

Fiona Ma is the Treasurer of the State of California and a candidate for Lieutenant Governor, the second-highest office in this state of 40 million. She is also the former Speaker Pro Tempore of the State Assembly, the second-highest leadership post in the state’s version of the House of Representatives.

She told our Meeting of the Minds that she knows very little about Generative AI but she came to learn. She went on to say that very few people who are running the state of California, the 4th largest economy of the world, know much about Gen AI either.

“California does not know about AI right now so we're just saying, ‘Hey, if the White House is doing it, we're just going to support it for now,’” Ma said.

To be fair, almost no one in government at the state or national level knows much about Generative AI. And for that matter, few people in business or the media know much either. Even those with a lot of tech experience from Silicon Valley and the San Francisco Bay Area are scrambling to figure out what’s going on in this brand new space. That’s partly the idea behind our Meetings of the Minds at the Shack15 club in the Ferry Building. Even tech experts and leading edge investors want to compare notes and learn from each other at this early stage in the AI revolution.

If previous tech booms are any indication, then government officials in California will be among the first to really understand the pros and cons of Generative AI due to their proximity to ground zero and their opportunities to learn, as Ma did. So one probable way forward would be for California to start to shape the landscape through relatively early action on some key issues.

Another place to watch is the courts, according to Earl Comstock, the Senior Policy Counsel for the law firm White & Case and a former advisor to the U.S. Secretary of Commerce. He said the rules that emerge around new technologies sometimes get set in the courts when plaintiffs claim they were harmed and the courts have to assign liability.

“When somebody gets hurt, we're going to go to the courts and the courts are going to have to wrestle with this,” Comstock said. “And the courts are going to basically apply the traditional laws that they've applied for literally hundreds and hundreds of years. And they're going to, at the end of the day, say you're responsible.”

Looking in the rear-view mirror of court precedent seems to be a very bad way to set the terms for how to make the most of a new futuristic technology while avoiding actual risks. So the optimal way forward is for the national government to create regulations that protect those developing the positive aspects of the new technology from liability.

In fact, Comstock worked for Congress when America faced a similar juncture with the arrival of the internet and set landmark regulations through the Telecommunications Act of 1996 and its famous Section 230 that protected internet providers from liability for the content created by their users. He said we can learn from that “light-touch regulation” that did not throttle innovation but helped unleash it.

“The one good thing I would say is: look, we've been through these revolutions before. It's new, it's novel and people are going to say, nobody's seen this ever before,” said Comstock. “But trust me, we've been down this road before. Let's just avoid the mistakes of the past and try to do it better this time.”

One difference from the 1990s is that we now all live in a globalized world where governing bodies from other regions also are concerned with the risks associated with Generative AI and want to wade into controlling it.

“The European Union views themselves as the Silicon Valley of regulation. I had that said to me three times by three different MEPs when I visited them earlier this month,” said Brian Behlendorf, a self-described Nerd Diplomat for all things Open Source. “Which is a little absurd, but they're very proud of the GDPR (General Data Protection Regulation) and I just have to say, it's adorable.”

“Their view is more of the precautionary principle. If you don't understand a thing, then we have to wait until we figure out what its ramifications are before we can permit it,” he said. “That's going to throttle the European artificial intelligence scene. It's going to throttle the ability to use AI to fight AI harms.”

Behlendorf is the CTO of the Linux Foundation’s Open Source Security Foundation, is on the board of the Electronic Frontier Foundation and Mozilla, and has been one of the pioneers championing the very successful rise of Open Source software since the 1990s. He thinks many of the lessons learned from Open Source can be applied to Generative AI to help mitigate many of the risks.

He said one of the biggest problems of Gen AI right now is that the vast majority of people outside of a handful of big tech companies have no idea what data the Large Language Models (LLMs) were trained on, or how the AI models actually work, and they have no sense of control over the outputs.

But Behlendorf said Open Source LLMs are fast catching up to the private ones, and the costs of developing personal AIs are coming down quickly, with the long-term trend lines looking even cheaper. He said many of the risks now associated with Gen AI might have tech solutions on the horizon in the form of Open Source software.

“AI by itself as a technology is not comparable to nuclear or bioweapons. It's comparable to any other kind of information technology that has amplified how we work out there,” he said. “And the right approach to addressing those harms, to addressing those concerns, I believe, and I think a lot of others share this, is to equalize access to that AI.”

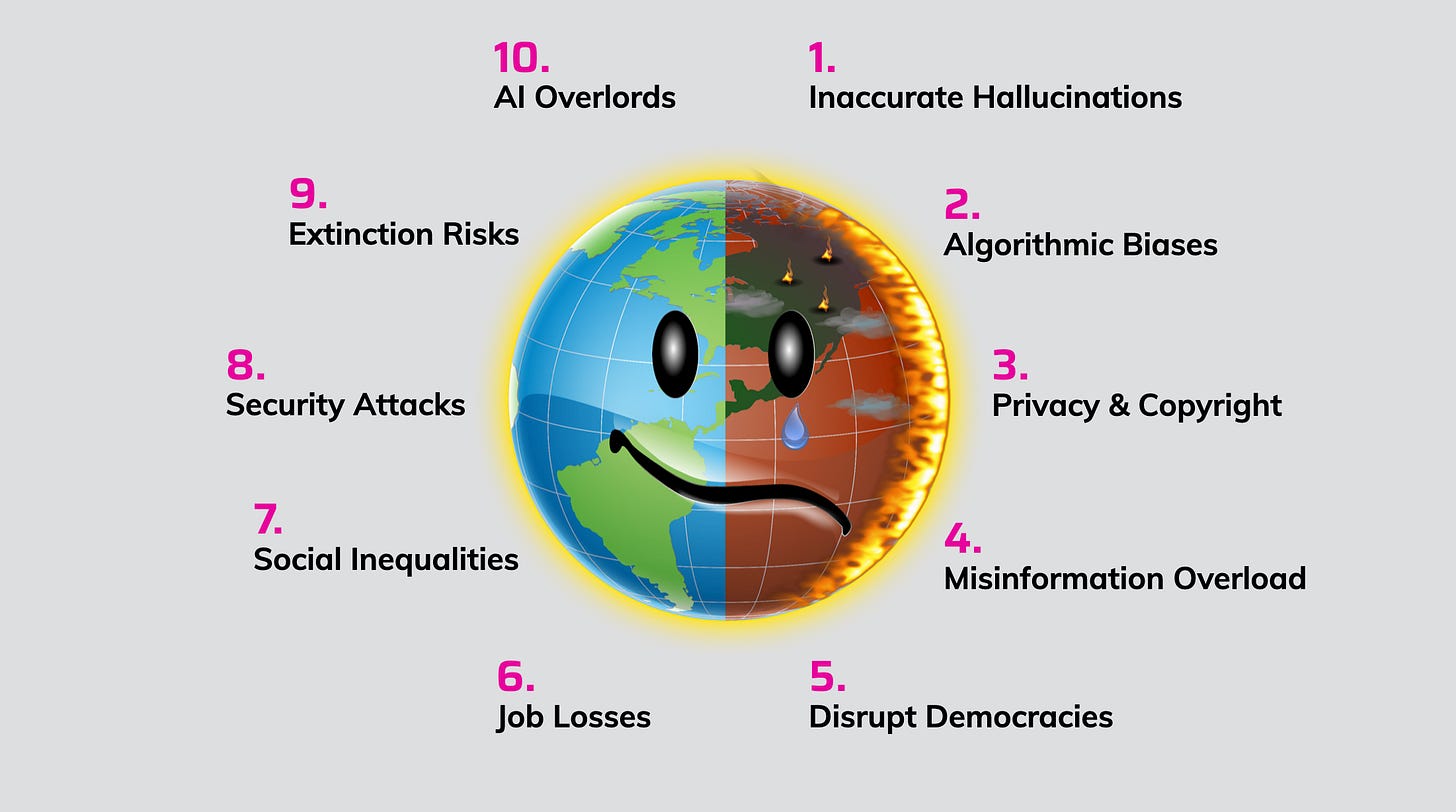

Tabbing up the Pros & Cons

This Meeting of the Minds was explicitly not about figuring out the solutions to the problems of Generative AI. We simply wanted to start to look at as many of the risks as possible and try to identify which ones really mattered, which ones we should start to prioritize, and which ones we could ignore for now. We even had all 250 people in the room show hands on which ones they saw as the most important and urgent.

My quick subjective analysis of that outcome is that the issues of Inaccurate Hallucinations and Algorithmic Biases might be getting a lot of attention right now but that they will largely get solved through more fine-tuning of the models and reinforcement learning in the next few years. The Privacy & Copyright issues will take government regulations to set new rules through the 2020s.

Misinformation Overload, and to a somewhat lesser extent Disrupt Democracies, were considered problems by more than half the people in the room but they seem to be an amplification of problems around social media that societies already are seriously wrestling with and making progress on.

The Job Losses and Social Inequalities that could be exacerbated by the looming economic disruption did not top the majority’s list but to my mind these problems will really bite first and be among the most difficult to deal with this decade. This is despite the fact that in the long run I think AI will create more and better human jobs, and will help out those on the bottom of societies more than those at the top.

Most people in the room were most concerned by Security Attacks (including against nation states, companies, and individuals), and most freaked out by Extinction Risks like those framed by De Kai. I share those concerns and expect that much of the early focus from the Powers That Be and the military in the next few years will try to lower those risks.

Only a relatively few people were worried about the Singularity leading to AI Overlords, and I think that’s about right. We will have decades to figure out how to deal with that ultimate extinction risk if it ever materializes. No need to waste many brain cells on that right now.

Daniel Erasmus, the European Founder of Erasmus.AI and one of our dozen speakers, pointed out that the real extinction risk is Climate Change and that problem is already causing catastrophes with many more to come. He reminded us that AI, far from being the problem, might be what we need to solve that problem.

“Our question should be: how can we use these technologies to address the existential threat that we're facing here with climate change?” Erasmus said. “In which ways can we use this technology to shift our future to a sustainable one?”

Our Meeting of the Minds moved the ball forward, but we could only solve so much in a long evening of conversations with a dozen remarkable people and then 250 innovators in the networking party that followed. You can continue to dive deeper into what we learned through the next piece on The Actual Risks of Generative AI – Extended Quotes, and then The Actual Risks of Generative AI – Video Clips. It’s a good start to a much longer conversation.

I’ll end by saying that even in a night dedicated to focusing on the risks we could not stop people wanting to talk about the solutions as well. Many of the speakers, like Behlendorf or Comstock, could not stop themselves from starting to rough out the possible if not probable solutions.

That’s the way we humans roll. Humans are incredible problem-solvers, and we keep getting better and better, era after era, year after year. We’ve accumulated a vast depth of knowledge, and a mind-boggling array of powerful tools, and have gotten extremely good at innovation.

We might not have all the answers right now to how to deal with all the risks that could arise with Generative AI but the odds are high that we will figure them out in a timely manner just like we have time and time again.

When we first captured fire, we probably got singed a bit, but we soon figured out how to deal with all the risks. When we first discovered electricity, we probably got a shock or two, but we soon figured out how to solve all those problems too.

There’s no reason, given all our accumulated know-how and tools, that we can’t or won’t figure out how to deal with all the risks of AI too.

Let the AI Age begin.

I want to recognize those whose support makes this ambitious project by Reinvent Futures possible, including this essay. Our partners Shack15 club in the Ferry Building in San Francisco and Cerebral Valley, the community of founders and builders in Generative AI. And our sponsors Autodesk, Slalom, Capgemini, and White & Case who help with the resources and the networks that bring it all together. We could not do this without all of them.

Important and insightful, Pete. I'm very concerned about the exacerbation of wealth concentration. You can see a steady evolution as tellers were replaced by ATMs, cashiers were replaced by automated checkout, and skilled manufacturing labor was replaced by factory automation (and of course by shifting to low-cost labor with few health/safety and labor protections). Now a significant amount of non-union labor can soon be readily displaced without the necessary changes in economic policy.

As someone who could not make it and feels like a kid with his nose pressed against the glass of this amazing technological revolution, I really appreciate this update.