The Case for Techno-Optimism Around AI

A Meeting of the Minds of many top AI experts at ground zero in San Francisco came to a general consensus that much more positive than negative will come from artificial intelligence

You can viscerally feel the techno-optimism back in the air of San Francisco these days as the arrival of Generative AI reenergizes the region. I certainly felt that optimism fill a room of more than 250 Artificial Intelligence experts and other prominent members of the tech and innovation world a couple of weeks ago on the top floor of the Ferry Building, which is ground zero for the San Francisco Bay Area and also for what is becoming known as Cerebral Valley.

I was in a good position to gauge that sentiment since I was hosting this Meeting of the Minds that my company Reinvent Futures had convened as the kickoff to a new series of events and related media called The AI Age Begins, which will systematically look at how AI could accelerate progress in many different fields. So to start the series we put out the call to help us answer what is becoming a critical question: “What is the case for techno-optimism around AI?”

The arrival of Generative AI and the opening up of AI for everyone roughly a year ago in the form of ChatGPT set off a big bang that’s been reverberating through the world. But most of the initial reaction from the media, the public and increasingly from governments has been negative and even fearful. This doom and gloom narrative did not map onto what we kept hearing on the ground among circles who were closest to understanding this new technology and what it could do. We needed to get those optimists together to clearly make their case for a more positive narrative.

The short answer that emerged in making the case for techno-optimism around AI is that those who are most familiar with this new general purpose technology can better see the full potential of this world-changing new tool. They can see many ways that humans augmented by this super-tool could transform the world for the better and help solve the most pressing challenges of our time like climate change.

“I've been working for almost 40 years in AI and more than 30 years in conversational AI and I am one of those who say: I never thought I would see what's happened in this last year in my lifetime,” said Adam Cheyer, the cofounder of the original startup Siri, which Apple under Steve Jobs bought in 2010 and which many of you talk with daily.

“For me, AI is shocking and important. In fact, it may be the most important tool humanity has created,” said Cheyer, whose latest AI startup GamePlanner.ai was recently bought by Airbnb.

The big-picture arguments about the long-term advantages of pushing ahead on AI are buttressed by another line of arguments that AI seems to have many short-term benefits that are rapidly being recognized. We heard this from those working with many of the top companies in the world, those in legacy industries that could stand to lose from a disruption of the old ways of doing business. Yet those same companies, which have the resources to move fast into these new opportunities, are forging ahead.

“We serve the Fortune 500, the Global 2000, and last year I spoke with 150 different companies (the CEOs, executive teams and boards) about Generative AI,” said Matt Kropp, the Chief Technology Officer of BCG X, the tech division of Boston Consulting Group, one of the premier strategy firms in the world. “I can tell you in talking to 150 companies, every single one of them is optimistic about this technology. Every single one of them is interested in: how can this help their business? How can this create competitive advantage for them?”

Then we heard from the GenAI entrepreneurs who are taking this new technology and pushing it in thousands of directions to figure out all the beneficial applications that people could use, and would pay for. Much of this activity of probing for positive possibilities occurs behind closed doors, and many of these companies are in stealth mode for competitive reasons, so the public and the media don’t see what’s coming, like, among many other things, imminent virtual assistants for all.

“I am such an optimist with this technology. I actually think AI is under-hyped, not overhyped,” said May Habib, the Cofounder and CEO of Writer, one of the leading GenAI startups that is helping large enterprise companies make the most of Large Language Models or LLMs. “The truism that technology platform shifts are overestimated in the short term and underestimated in the long run — we definitely see it here.”

To be sure, everyone who spoke to make the case for AI techno-optimism, and probably everyone in that room of 250 innovators, acknowledges that the arrival of such a powerful tool can and will bring risks. Our motivation in convening this event, and holding the ongoing series of future events exploring the positive possibilities of AI, is that there are plenty of other forums focused on the risks and more than enough looks at the possible negative consequences. We wanted to create a space where we can focus on the positive potential, and fully understand what’s possible before jumping into slowing down or constraining the development of AI through early regulation or stirring up public backlash.

Our call to create a more positive forum to understand AI brought together several key networks in the region that includes Silicon Valley. Some came from what I consider the intelligentsia of the tech and innovation economy, particularly from the twin nodes of Stanford and UC Berkeley. Some came from the world of up-and-coming founders and builders of GenAI, partly through the help of our partner Shack15, a new club in the Ferry Building that has held many key hackathons and where we hold our gatherings. And then we had many senior leaders of top companies in tech and global industries, partly through the help of our other partner BCG X, the tech arm of Boston Consulting Group, as well as sponsors that are pioneering AI like Google, Adobe, Writer and B Capital. That critical mass also attracted journalists, politicians and government officials, investors and other innovators and creatives for an amazing conversation that rolled into a party for everyone to cross-connect.

In the end, after hearing a dozen thought leaders make their case for techno-optimism, each of us voted on where we fall on the “Speedometer of Techno-Optimism Around AI,” which you saw to start this essay. We asked how worried or how confident you were in this statement: “AI will make a largely positive impact on the world in the 2020s and we will manage the risks.”

You need to read on to get the final tabulations towards the end of this essay. By that time you will have heard from the many AI experts, as we all did that night, with links to their full remarks in highly produced videos on YouTube. You then will be in a position to judge whether you are “seriously worried” and think we need to slow down development, or “fully confident” and think we should all move full speed ahead.

The Really Big Picture on Our Really Important Moment in History

I began this event and so this series of events by laying down some big-picture assumptions that we will build off and not constantly debate. First, the arrival of Generative AI and the opening up of AI to everyone will be seen over time as a world historic moment and the true beginning of the Age of AI, hence the name of the series The AI Age Begins. This is a very big deal.

Historians of technology generally categorize about 25 general purpose technologies as truly world-changing, from the wheel to the printing press to electricity. Artificial intelligence will certainly be considered part of that pantheon of technologies that gave humans a step change in our capabilities and fundamentally changed the way we did things forever after. That’s a big enough deal, but there’s more.

Artificial intelligence is opening up a whole new category of human augmentation that we have never been able to do until now. From the earliest days of Homo sapiens roaming around the savannah starting about 300,000 years ago until The Enlightenment about 300 years ago, human augmentation was limited to harnessing the physical powers of animals at best. Then the invention of the steam engine dramatically augmented our physical powers with the power of 200 horses and over time exponentially more.

For all of human history any task that required intelligence required a human brain to carry that task out. That meant if you had to sense the world around you, reason about a changing situation, and make a series of judgments and a final decision, you needed a human to do it. Until now. We now have intelligent machines to augment our mental powers. All the tasks that require intelligence and that were once reserved for humans can now be reconsidered in this new light. And those tasks of intelligence span an immense range of pretty much all industries and fields over time. This is a very, very big deal.

The transition to this new age of intelligent machines is also going to move faster than what we have become used to with the arrival of previous new general purpose technologies. That’s because all the previous ones have required a long period to build out the new infrastructure required to make the new tool actually work. The most recent example that we all have lived through is the arrival of the internet. The comparable moment of first opening up the internet to everyone came in the 1990s but it took another 25 years to build out the fiber optic lines, and wireless networks, and expand the bandwidth to accommodate video, among many other things, to finally get more than half the people on the planet connected and able to really use the capability that the internet provides.

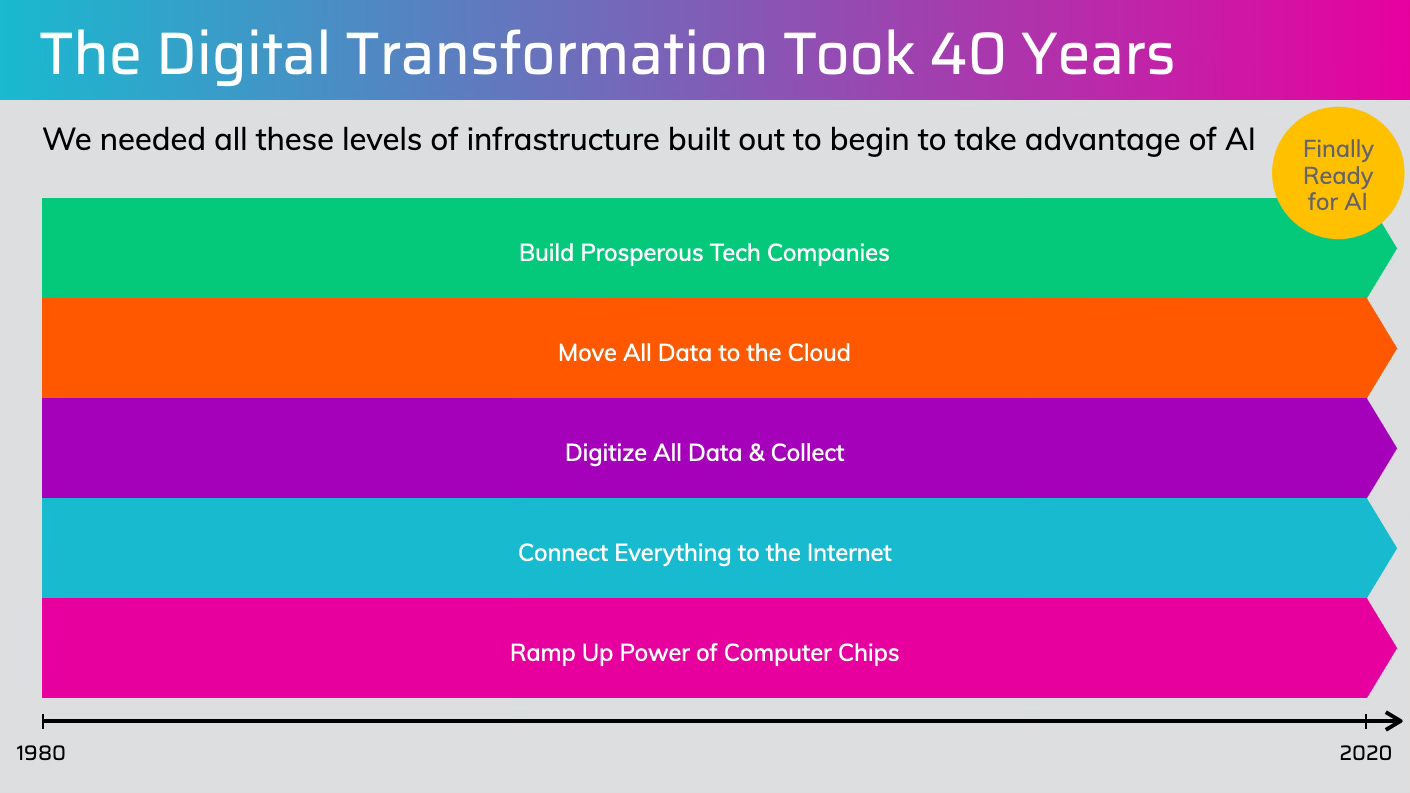

The arrival of AI requires no such infrastructure build out. A better way to understand the situation is that AI is the crowning achievement of what has been a monumental global infrastructure buildout for the last 40 years in the form of what we now call The Digital Transformation. The adjacent graphic crystallizes the infrastructure layers that had to be laid over time. We had to exponentially ramp up the power of computer chips through the dogged process of Moore’s Law, with chips doubling in power roughly every two years. We had to connect everyone and eventually everything to the internet along the lines I just laid out. We then had to turn all information into digital form, starting from pretty much none of it digital 40 years ago. Then we needed all that data accessible and interconnected through the cloud. And then I would argue, in the absence of enough government research investment, we needed very prosperous tech companies with the resources to invest many billions of dollars into AI research for more than a decade with no return to get the breakthroughs in neural networks and LLMs and transformers and the like.

All that hard work and incremental infrastructure buildout of the last 40 years is now done. Very little additional infrastructure is needed for AI beyond making even more powerful chips, and building even more data centers, and producing more energy to power the computations. AI can now take off in all directions. If most people are not thinking big enough about the implications of AI, then they are not moving fast enough either.

The final assumption we make in this series is that AI is like all previous general purpose technologies that first arrive in the world. The technology arrives unknown and neutral to start. No one really knows what you can do with it, for good or for bad. All general purpose technologies that are successful then begin a process of figuring out all the benefits, as well as thinking through all the risks.

Humans seem to have a default setting that makes them initially look at the risk. I suppose that comes from being the most recent of a long line of our species that emerged from a world when a rustle in the bushes probably did mean risk. Our ancestors who immediately freaked out at the rustle and ran like hell became our genetic germ line. Those who shrugged at the rustle at some point became a meal for a lion and their optimistic genetic line died out. Whatever the reason, even modern humans seem to initially worry about new developments and resist change.

This certainly seems to be the case with the arrival of AI. I think it’s fair to say that the dominant narrative in public conversations about artificial intelligence in the last year has been largely negative. The media seems to be primarily focused on the potential problems and the risks, and the more sensational the better. There seems to be an inordinate amount of attention on how AI will grow to a super intelligence that will rule over humans if not kill us off altogether. Almost all the AI experts I know consider this a ridiculous worry for at least many decades if not ever.

All general purpose technologies probably had some version of that initial fear of the risks. But then they all go through a process of entrepreneurs and many other people figuring out all the positive things that can be done. Electricity arrived on the scene and skeptics rightly pointed out that if you touch electrical wires you can die. But many other people started figuring out that electricity could light our homes, and then our streets, and then power useful household tools like washing machines.

Soon the benefits of electricity were seen as clearly worth dealing with the risks. And then we humans applied a lot of ingenuity to mitigating the risks while maximizing the benefits. We moved from a risk/benefit ratio of say 40/60 to 30/70 over time and finally we got to 20/80 where the risks of electricity are totally manageable and so that general purpose technology is considered totally safe. We have institutionalized systems (like electrical codes and inspections) that have fully de-risked that general purpose technology and now we don’t even think about it.

AI is at the very beginning of that process. We don’t know all the benefits that could come out of this technology because we’re just wrapping our heads around what it can do. We haven’t even begun to think through how AI could now be used to solve many of the great challenges that have stymied us up until now. Could AI be the transformative tool that we really need to finally and fully solve climate change? Could AI be what we need to fundamentally reinvent personalized health care for the 21st century?

The AI Age Begins series of events is out to create that consistent space to think through those key questions and figure out the many benefits that may come. We want to connect up entrepreneurs and a wide range of other innovators to help envision the full potential of this new general purpose technology. Then this more positive narrative (among others out there) can be contrasted with the prevailing negative ones to give a more balanced sense of what we all should do.

The Overarching Case for Techno-Optimism Via the Experts

From the earliest days of thinking about artificial intelligence after the arrival of mainframe computers, two general schools of thought emerged. One envisioned AI as eventually operating autonomously from humans, and the other thought of AI as primarily augmenting humans. The foundational thinker most closely associated with the augmentation school was Doug Engelbart, who was a key mentor to Adam Cheyer, the Cofounder of Siri.

Cheyer (click through to watch his full video, like with the others to follow) made his case for AI by focusing on how AI already is augmenting humans with capabilities that we simply could not do without it. He mentioned the recent example of AI solving a set of math problems that human mathematicians had been trying to solve for the last 50 years. Or how Google’s DeepMind AI had been able to predict the folding of proteins an order of magnitude faster than humans have been able to do. This is only the beginning of such augmentation that almost certainly will get better and better, while the world keeps struggling with wicked problems like climate change.

“There are risks with AI: Job displacement, ethics and bias, the energy consumption. But then you look at the existential risks of the world: hunger, poverty, water, energy. If you then look back at AI, you're like: AI has some risks, but it also can have a huge ability to help us solve all of the other problems,” Cheyer said. “If I'm going to cure cancer, I want AI to help me.”

“So overall, when we look at the net positive to minus, I'm absolutely a tech optimist,” he said. “This will help us solve many of the problems that we couldn't do without it.”

Let’s stay at the macro level and consider the economic implications of widely distributed Generative AI. Many economists have started speculating on the possible boosts to productivity, particularly for knowledge workers in developed economies. Initial looks at early adopters like software coders have often shown a doubling of what they can do in a day, if not more. This has been raising hopes that the overall productivity rates of developed economies might be able to rise substantially after stagnating since the last rise during the internet boom of the 1990s and early 2000s. If the core productivity rates rise, then that almost certainly would convert into higher economic growth rates for those advanced economies beyond the norms of 2 percent we have become used to after the post-war boom of the 1950s and 1960s.

We asked for some thoughts from Brad DeLong, a prominent economic historian from UC Berkeley and author of Slouching Towards Utopia, a history of the 20th century through the lens of technology and economics. He gave 5 reasons for optimism (which you can see through the above link), but his first one stood out: AI finally will give us the means to reach the Holy Grail of mass production in being able to cost effectively personalize exactly what individuals want.

“The transformation from the world of the bureaucracy that fits everything and everyone into a Procrustean bed to the world of the algorithm which gives us not Peter Drucker's mass production or Bob Reich's flexible customization but rather bespoke creation for nearly everything,” DeLong said.

“The difference between the thing that is sort of what you want and exactly what you want is immense for user value and that's been the Holy Grail that mass production and its successors have failed to find. We are going to find it.”

Much of that productivity will come from the next wave of Generative AI in the form of autonomous agents acting for each of us out in the world. Peter Schwartz, the Chief Futures Officer for Salesforce, and a close advisor to CEO Marc Benioff, expects that each of us will soon have a very capable virtual assistant, or probably a range of specialized agents that can do many of the tedious tasks that bog us down at work or at home.

“All of the friction in life that we now experience, whether it's ordering things online or doing research or carrying out medical tasks, all begin to disappear into the background,” Schwartz said. “Most of the downsides of Generative AI will be gone within a couple of years and we will all have AI assistants that enable us to carry out all the tasks we want with minimal friction.”

To be sure, we don't have those agents up and working yet, though some rough prototypes are emerging and many startups and the tech giants are getting close. Harrison Chase, the Cofounder and CEO of LangChain, one of the better-known of the new crop of GenAI startups, particularly in the open source world, said of AI that one key issue to figure out is memory—not the technical memory of computers but the ability to remember everything about the individual person over time. We should soon be there.

The Positive Impacts Already on the Business World Today

Even now we are seeing clear signs of the beginning of the productivity boosts for those who use AI. Like all good strategy consultants, Matt Kropp, the CTO of BCG X, had actual numbers to reference based on a scientific study that BCG did with Harvard, Wharton, and MIT. They took 750 BCG consultants and split them into two groups, one able to use GPT-4, the other not. Those armed with AI finished their tasks 25 percent faster, and did 15 percent more work. Those are solid numbers that would make a quantitative difference over time.

The surprising part of the study was that the quality of the output was 40 percent better for those using AI. That’s a seriously impressive shift. Plus the consultants who were known as the bottom performers, but were able to use GPT-4 for the study, performed as well as those who were known as the top performers.

“So what that means is your new people (every company has new people at the bottom), those people can perform as well as the experienced people. It's going to completely change the way that we think about skilling and talent,” Kropp said.

Those numbers coincide with what May Habib, the CEO of Writer, is seeing in their work with a wide range of companies involving hundreds of thousands of employees. Writer works with established enterprise companies to build the full stack of technologies needed to get the GenAI LLMs connected to a company’s data and workflows. Once they get that up and running, the knowledge workers within those firms are able to quickly accelerate their work processes.

“Never before has it been so fast to go from having an idea to having a working prototype,” she said. “This is not like solving cancer type of stuff. It's wealth managers who now write proposals for their clients in an hour or two instead of a day or two. It's brand managers who can ship campaigns in days, not weeks and months, but really shortening that road from idea and ideation to execution and reality is really connecting people to their work in much more profound ways.”

“That's a really exciting future because most of the technology waves that we have ever had resulted in knowledge workers working more, not less,” Habib said. “The average highest paid knowledge worker is working close to 60 hours a week now. And I think we finally have a technology that can actually reverse that. So we can spend time doing the things that make us really uniquely human.”

The canary in the coal mine that could detect a positive or negative impact from GenAI early would have to be the creative industries like those that involve writing or image creation or even movies. The earliest examples of what GenAI could do often involved those capabilities and so initially freaked out content creators in those industries. Witness the strike in Hollywood last year coming from those writers and actors in the film industry partly worried about the looming impact of AI on their jobs.

However, another way to think about the impact of GenAI on creativity is to see it as democratizing. Work that used to require a professional elite like those who claw their way to the top of Hollywood, and that required studios that could marshal hundreds of millions of dollars to produce one movie, now can be done by a wider range of people for dramatically less cost.

Ken Reisman leads Digital Media Enterprise at Adobe, which provides the foundational suite of tools for many professionals involved in creative design like film editing and graphics. Anyone who has used Photoshop or Premiere Pro knows Adobe—which adds up to a lot of creatives. Adobe is also charging into the GenAI world with their own product called Firefly. Reisman said professionals using their AI products are finding that many common tasks can now be done 10 times faster if not more. And most of those tasks are drudgery so they are happy to leave those tasks behind.

“I feel like I'm living in the future now. Just seeing what's in the lab at Adobe, GenAI will profoundly affect human creativity. Really ushering in a new era of creative expression,” said Reisman. “That sounds like hyperbole. I don't think it is. I think it is really going to democratize creative expression. Just expanding the range of people who can express themselves creatively by making it incredibly easy, virtually effortless to create images, videos, really any form of media.”

Bilawal Sidhu is one of those creatives who works on his own as an independent and has amassed huge followings in social media for his videos. He’s become so well known that he was asked to present on the main stage of the annual TED conference in 2023. He talked about the possibilities in the near future for someone like him to create up to 100 times what he has been able to do so far using laborious traditional methods.

“It is democratizing the ability for people to do virtual production, essentially bringing the stuff James Cameron only dreamt of in the 2000s to indie creators like me,” Sidhu said, adding that there can be downsides with these new tools too. “At the same time, it is democratizing the ability to surveil and build detailed biometric profiles about people at large.”

Back to Looking Towards the Future and What May Lie Ahead

Remember this is only the very, very beginning of The AI Age. What these tools can do now is only a taste of what they will be able to do over time. This is like someone 30 years ago trying to get onto the internet through some squeaky 2400 baud modem over a telephone line and then trying to envision what it would take to stream a 4K movie over a handheld mobile phone that didn’t exist yet.

So let’s close the case for techno-optimism around AI by looking a bit farther out on what might be possible. In our general conversation with the 250 innovators in the meeting, Kim Polese, the Cofounder of CrowdSmart.ai, brought the conversation back to the long-term possibilities of future generations of AI. She said the federal government’s DARPA considers us now in a second wave of AI and anticipates that the next generation of AI will be more of a fusion of machine and human intelligence.

“Third Wave AI is when we actually integrate human intelligence,” she said. “When we actually build AI systems that are self-learning, self-supervising, adaptive, that use agent-based technology as we heard a little about today. And those systems actually can use AI to help facilitate, like the best human facilitators, our collective ability to problem solve.”

“What can we build with those kinds of systems?” she said. “We can build new kinds of social engagement tools that instead of optimizing for outrage, optimize for common ground. We can build systems that help organizations be nimble, adaptive, find alignment quickly. We can build systems that help us achieve breakthroughs.”

One obvious front in need of breakthroughs or needing help aligning humans against a common challenge is climate change. We heard from Kate Gordon, former Senior Advisor to the Energy Secretary at the US Department of Energy, on three areas most ripe for help from GenAI. All of them involved helping blast through the logjams of information piling up in the bureaucracies of federal, state and local governments as well as utilities that are slowing down America’s ability to scale up clean energies.

We won’t go into those details now because our next Meeting of the Minds on April 24th will focus exclusively on the question: “How can AI accelerate progress in clean energy and climate tech?” Our essay on what we learn then will be able to go in depth into AI’s impact on that field.

California State Senator Josh Becker, one of only 40 senators in this state of roughly 40 million people, who represents part of Silicon Valley, took in the whole event before giving his take. Becker gets AI since he was the founder of Lex Machina, an early AI company that focused on creating value from legal documents and cases that human lawyers were unable to digest because of the sheer scale of information.

Many governments throughout America and the world are gearing up for regulation of this transformative new technology. Europe has already established some early guidelines that are causing consternation among those building AI, who mostly reside in America, largely on the West Coast, and often right here in San Francisco. California seems like the best positioned governmental body to set the terms of any regulation. That’s certainly the feeling of many AI technologists.

Becker said California is already considering 35 bills that have been introduced concerning Artificial Intelligence, including a handful that he has authored. Like most politicians representing a wide range of constituents, he maintains a balanced approach to the many issues surrounding AI. That said, he made his case for optimism as well.

“I'll say the last case for optimism, and I thought about it today, when I see all these great uses, I actually think that in the world of AI that being human can be more important than ever,” Becker said. “The human connection will be valued more than ever before.”

One final analogy was made by Chris Anderson, who ran WIRED magazine as Editor-in-Chief for a decade in the Web 2.0 era, and then founded and ran a drone company, before becoming CTO of Larry Page’s Kittyhawk. He reminded us that in the early days of the Human Genome Project, a portion of the public budget was set aside to talk about the ethics of cracking the genetic code of humans. Many conferences were held to imagine what could go wrong, but in the end the technologists just went ahead and did it. And only when technologists tried to work with genes did we understand the actual issues and risks.

“So I think what's going to happen is that we're going to talk a lot about what could happen with AI, and we're going to go do it and what goes wrong—we'll fix it,” Anderson said. “And if it turned out that what we're worried about was not the right thing to worry about, then we won't worry about it anymore, and we’ll just course correct as we go.”

“You're here at ground zero as we're running through the experiment, but I would say there's basically nothing like doing to learn,” he said. “Ultimately, in this room with this group of people, we will do it and next year when we come back, we'll figure out what worked, what didn't work, and we'll fix the bits that didn't work.”

Sorting Out the Techno-Optimism in the Room and in San Francisco

Which brings us back to our rough poll on gauging the level of techno-optimism in the room that night. Granted, this was an invite-only crowd of innovators involved in GenAI, or more broadly the tech and innovation economy of the region, plus many intellectuals from academia or other thought leaders. This was not your average man or woman on the street.

A further caveat is that the poll was far from scientific since we only were able to see a show of hands of the more than 250 who maxed out the room. (You can try to parse out the number of hands in the video of the exercise too.) But I promised at the beginning of this essay to give you some sense of how people in that room reacted to this statement: “AI will make a largely positive impact on the world in the 2020s and we will manage the risks.”

Seriously Worried: Just a handful of people raised their hands to this first option so we can set that group aside.

Mostly Worried: Maybe twice as many people voted for this one, so two handfuls rather than one. Still, a minimal showing.

Feel Generally Good: This was probably the biggest group in the end, all things considered. I would say a bare plurality and far from an absolute majority.

Mostly Confident: This was the second biggest group, pretty close to the number in the generally good category.

Fully Confident: This was a significant segment of the whole group, for sure the third biggest, and bigger than the first two worried categories combined.

The combined effect of all 5 categories puts the sentiment of the room clearly in the green zone of confidence: the view that the positives of AI will outweigh the negatives and that we will be able to manage the risks in the 2020s.

What do you think?
