The Actual Risks of Generative AI - Extended Quotes

Go deep into the insights of a dozen Gen AI experts who helped clarify what risks we may actually face and pointed out responsible ways forward.

I started my career as a journalist and have learned over the years that the best way to understand something new is to find the pioneers who are grappling with it early and just talk with them. I would often record those conversations and transcribe them so that I could really study what was said and let the insights sink in.

So I wanted to preserve that approach to learning when launching The Great Progression, a series of events in San Francisco and a media series on Substack. We at Reinvent Futures have put a lot of effort into gathering a dozen top innovators and experts to give short five-minute talks at each of our events on the key questions surrounding Generative AI.

The 250 or so innovators invited to the physical event in San Francisco should not be the only ones to learn from our experts' many insights, including those shared at our most recent event. So I synthesized some of the key takeaways in The Actual Risks of Generative AI essay. But a lot of terrific material from the transcript ended up on the cutting room floor.

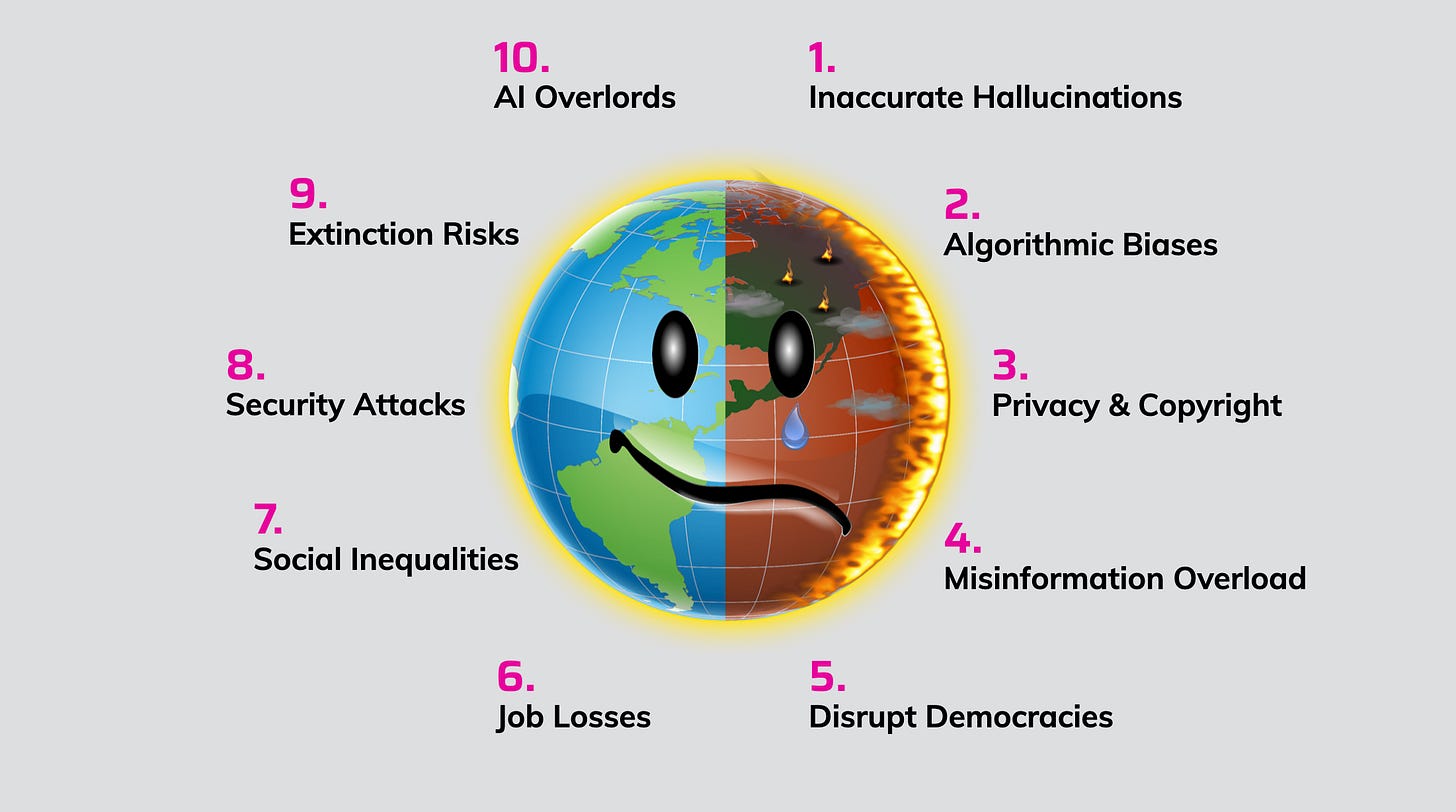

So I have pulled together some of the best passages and laid them out below. They roughly touch on all the risks in the graphic above. A few of the quotes can also be found in my essay, but mostly this is new material in the speakers' own words with no spin from me. I learned a lot from these passages, so maybe you will too.

Jerry Kaplan, Inventor of the tablet computer, Cofounder of one of the first AI companies, Author of the new book Generative AI: Everything You Need to Know:

I've never been a big booster of AI, but I think we need to face something here and admit that Generative AI (GAI) is Artificial General Intelligence. We are there. We have achieved this, or we're about to cross this boundary.

Is it general? Come on. Have you guys played with any of these systems? We're talking about anything. And as soon as we hook it up to the outside world and actions, it's going to be astounding what it can possibly accomplish.

Is it intelligent? You have to read my book to go through the analysis of this, but the simple answer is yeah, it's highly intelligent. It's real intelligence, and it's really general, and it's really artificial. So as far as I'm concerned, that should be it for today. This is AGI, Artificial General Intelligence.

Now, in the book I look at various major inventions in history and compare their impacts. They include the wheel, the printing press, photography, light bulbs, airplanes, computers, and the internet. And the truth is, Generative AI is going to be much more important than any of these inventions. I'm quite convinced of that.

It might be the most important human invention ever. And part of the reason I say that is that we have created a tool that can use tools, and that's a very fundamental difference and we've crossed that threshold now.

…

I would say that probably even 10 years from now, if you want to get the most objective and comprehensive advice or information on any subject, you're going to consult a machine, not a human being.

I'm a little on the older side. Didn't start out that way. And I'm genuinely, seriously grateful to have lived to see this moment. I had never expected to see this in my life.

…

Now super intelligence and the singularity, I'm sure many of you have heard about this. I'm going to briefly explain why it's complete nonsense.

They are not coming for us. There is no They. That's not what this is about. We're building tools. These systems have no desires and no intentions of their own. They're not interested in taking over. They never will be.

They're not drinking all of our fine wine and buying all the beachfront property and marrying our children. That's not what's going to be happening.

De Kai, AI Professor at ICSI (UC Berkeley) & HKUST (Hong Kong), Machine Translation Pioneer, on Google’s Inaugural AI Ethics Council:

I am very, very worried about Generative AI amplifying fears and polarizing us. In this respect I think Generative AI is even more dangerous than the search, recommendation, and social media engines that have been making decisions about what to show us, and what not to show us.

When I first took up my professorship several decades ago, after my PhD at Berkeley, where I wrote one of the first theses to argue for probabilistic, statistical, and neural network approaches to natural language interpretation, the reason I decided to apply that brave new world of machine learning to pioneering web translation AIs was to help reduce polarization.

Pretty much everything in my life has been aimed at reducing polarization between different cultures and subcultures. When I foresaw, nearly a decade ago, the dangerous polarizing effects of the same technologies that we helped pioneer in search, recommendation, and social media AIs, I started speaking widely about the issues.

…

What makes the situation super-dangerous is that this polarization and fear-mongering is happening at the same time today that AI is also democratizing weapons of mass destruction.

Until now, humanity has managed to survive WMDs only because they've been limited to a handful of highly resourced nation states. But now we're perilously close to the days when AI is enabling not just arsenals of lethal autonomous weapons, but also criminals, terrorists, rebels, even disgruntled individuals who can practically head down to Walmart or Home Depot and pick up everything they need to 3D-print meshed fleets of drones, targeted by voice and facial recognition and armed with anything from projectiles to CRISPR-engineered bioweapons.

World War I was triggered by the assassination of a single archduke. Would you take a bet that not a single person is going to push their launch button? It's a terrible bet. The question is, what would cause someone to push that launch button? Well, loss of hope, overpowering fear, irreconcilable polarization.

…

We still don't talk about the critical point that AIs are not just deciding what we see. They're also deciding what we don't see — dozens of times a day.

Whenever you Google something, or search YouTube, or Amazon makes recommendations, or you check Instagram or Twitter, AIs are deciding for you not only which 50 items you are going to see today, but also which trillions of items you're not seeing today.

We're empowering AIs to decide what to censor from our view, what crucial content to omit from what you see. Omission of crucial context is very profitable for the companies that operate these AIs. But omission of crucial context is also the single biggest enabler of polarization and fear. Not falsehoods, not bad actors.

Now, if you think this is a problem with traditional AIs, Generative AI is worse, because it doesn't give you clumsy lists of items. It synthesizes them into a neat paragraph of several sentences. That triggers a huge cognitive bias called the fluency heuristic, where we assign much greater credibility to something that is presented fluently.
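De Kai's point about omission can be made concrete with a toy ranking step. This is a minimal sketch under stated assumptions, not how any particular platform works: it assumes every item in a catalog already carries a single relevance score (the item names and scores are made up), keeps only the top `k`, and reports how many items were silently left out. The function name `rank_feed` is hypothetical.

```python
import heapq
import random


def rank_feed(scores: dict[str, float], k: int) -> tuple[list[str], int]:
    """Return the k highest-scoring item IDs and how many items were omitted.

    Toy model only: real recommender systems combine many signals,
    not one precomputed score per item.
    """
    shown = heapq.nlargest(k, scores, key=scores.get)
    omitted = len(scores) - len(shown)
    return shown, omitted


if __name__ == "__main__":
    # Hypothetical catalog of 10,000 items with random relevance scores.
    rng = random.Random(42)
    catalog = {f"item-{i}": rng.random() for i in range(10_000)}
    shown, omitted = rank_feed(catalog, k=50)
    print(len(shown), "shown;", omitted, "never surfaced")  # 50 shown; 9950 never surfaced
```

Even in this toy, the user sees 50 items and gets no view of the 9,950 that were dropped. Scale the catalog up to the size of the web and the omitted set dwarfs the shown set, which is the asymmetry De Kai describes.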

C.C. Gong, Founder of Montage AI, Cofounder Cerebral Valley organization, & a former White House Innovation Fellow:

What is Cerebral Valley? It's really just a pun, it's not a real thing, but the phenomenon is that Silicon Valley, San Francisco, is reclaiming its throne as the white-hot center of gravity for this AI revolution. For a while people have been joking that SF is dead. Well, I would say now that Miami is dead, and Austin is dead, because SF is back. It's soooo back.

…

We've been organizing these events, and not just us; there are dozens and dozens of organizations like us that organize events, hackathons, discussions, salons, and dinners. On any night of the week, there are at least 10 huge events, hosted by incredible speakers and incredible organizations. There's at least one hackathon a week, with hundreds of folks coming in from all around the world.

I've met people from all over the world. There's someone from Botswana, there's someone from Germany. I've met people from every continent at these hackathons, and I've actually talked to many of them who come for a weekend, and end up just staying, because they see the energy here and they see that if you're not here in person, it's almost like you're at a disadvantage in this revolution.

…

One interesting thing I've seen is the rise of non-technical founders in this AI space. I worked on AI at Meta and Microsoft, and it always felt like machine learning AI was a field for very technical folks.

I judged a hackathon a couple of weeks ago with David Sacks and the CEO of Replit, and there was a non-technical track. What we saw is that the non-technical founders ended up creating projects that were more complex than those of the technical founders.

This is opening up space for so many more people to build, and to ideate and to create. That's also led to this gold rush feeling where everyone's coming to see what they can create. It feels like the beginning of an era.

…

I think, as founders, what we're thinking about in terms of the risk implications at a societal level is: can we come together in the middle and set up self-regulating standards together?

If you saw this week, Microsoft, OpenAI, Google, and Anthropic got together and created the Frontier Model Forum. That is a great step forward, with collaboration between parties who actually know what's going on and understand it, forming these regulations in conjunction.

Because the last thing we want is heavy-handed regulation that stops progress, and that actively works to take us backward.

Eli Chen, Cofounder of Credo AI, applying human oversight to AI, veteran engineer of Netflix & Twitter:

I consider myself a software engineer first and foremost. So, I really see this as an engineering problem. To give you a little bit of background, I was in my previous startup working on reverse engineering neural networks, and pulling out what concepts have been learned in these neural networks so that they could be used for de-biasing and debugging these neural networks.

What has been quite surprising is that we weren't even done with that work when, with ChatGPT and all the recent work on LLMs, we reached the stage of general purpose AI. It's not a narrow AI anymore; it's really general purpose.

And what has happened is that this technology has suddenly extended the use of AI beyond machine learning builders and machine learning teams to anybody, not just in the corporate world but also in the consumer world.

…

There's still a lot of knowledge to be gained about how LLMs and Generative AI work. We would benefit from a lot of transparency into how these systems work and where their limitations are, such as where the biases are and what data they were trained on.

I encourage us all to ask for this transparency from the providers of these AI systems, whether we're building them ourselves or using them from large providers. I think that's a starting point for engineering the controls to mitigate these risks.

R “Ray” Wang, Founder of Constellation Research, Venture Partner with Mindful VC:

I wrote a book called Everybody Wants to Rule the World and it's really about the digital divide that was coming up. And in that digital divide, the big companies got bigger and the smaller companies were thrown out. And this was pre-COVID, pre-Gen AI.

And with Gen AI what we actually have is a situation where if you want AI to succeed, you need lots of data, lots of compute power, you need large networks of information and only the large might win.

And so we're in a situation where there's a lot of social inequity that's actually going to occur. Big companies are going to get bigger, startups are going to be dependent on these larger companies and their networks.

It's going to be hard to get access to GPUs and TPUs in the future and governments are going to have more control over individuals. And I'm a little bit worried about that, are you?

…

The cyber attacks are getting better… We need AI to go after AI and our bots are going to be fighting other bots.

COVID was the beginning of the first wave of AI attacks. There are a lot of things I can't talk about, and many of you in this room can't talk about, but everybody had a good offense and nobody had a good defense.

And it was brutal, the types of attacks that were happening. And that was just the first generation. Those were tests to figure out what future cyber attacks were going to look like.

And so we're basically in a world where all these Generative AI models are going to create new types of attacks and go after each other.

Fiona Ma, Treasurer of the State of California, Candidate for Lt. Gov., past Speaker pro Tempore of the Assembly:

California is unique. We like to lead the nation and the world in terms of legislation, regulations, pushing the envelope, and so I'm going to talk a little bit about that.

Right now in our state legislature, there is a bill, SCR 17, by Senator Bill Dodd. That's a Senate concurrent resolution, and basically it's just trying to promote an issue. It commits the legislature to examining and implementing the principles outlined in the Blueprint for an AI Bill of Rights, published in October 2022 by the White House Office of Science and Technology Policy.

California does not know about AI right now so we're just saying, "Hey, if the White House is doing it, we're just going to support it for now."

…

I would just challenge all of you to reach out to whoever you know, your friends, people in politics, people who are consulting us, and tell us what is true and what the worst outcomes could be.

I know that all of us in politics want to better the world. We want to use AI to reduce healthcare disparities, level the playing field in education, improve traffic, and maybe even tackle insurance fraud.

What we need to know as elected officials is how AI and Generative AI are going to improve our society and how to take away the fear that we are hearing from folks.

Ethan Shaotran, Cofounder of Energize.AI, Harvard student, winner of OpenAI’s Democratic AI Grant:

Let's talk about how AI learns. It's something called reinforcement learning. That is a very similar process to how we humans learn today, which is that as students and children, we learn what's right and what's wrong, how to solve a problem correctly versus incorrectly. And from that, our parents and teachers give us a reward, either a positive or a negative reward based on how we did.

That same dynamic and process is how we train AI today. We give them a positive signal when they do well and they solve a problem correctly, and we give them a negative signal whenever they're biased or racist.

That's a really important dynamic because right now AI is still just a teenager. It's still learning how to be a better doctor or engineer.

The way we go forward and make this a safer technology is having all of us, everyone in this room and everyone around the world, collectively using their opinions and their preferences and their knowledge to contribute, and teach AI how to be a better person in today's world.

That means that for the future of jobs, in five or 10 years we're increasingly going to be working with AI in our own jobs and in everyone else's, not just using it as a tool but also teaching it ourselves when it's doing a good job and when it's doing a bad job.

And all that data will teach it how to become the best doctor, the best engineer, the best lawyer, the best global citizen of this new future world.
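Shaotran's description of positive and negative signals can be illustrated with a toy reward-learning loop. This is a sketch under simplifying assumptions, not how chatbots are actually trained (production systems apply techniques such as reinforcement learning from human feedback to large neural networks): a "policy" chooses between two hypothetical responses, a made-up reward function scores +1 for the `"helpful"` one and -1 for the `"biased"` one, and a textbook gradient-bandit update shifts probability toward whatever earns positive reward. The names `train` and `feedback` are illustrative only.

```python
import math
import random


def softmax(prefs: dict[str, float]) -> dict[str, float]:
    """Turn preference scores into action probabilities."""
    top = max(prefs.values())
    exps = {a: math.exp(p - top) for a, p in prefs.items()}
    total = sum(exps.values())
    return {a: e / total for a, e in exps.items()}


def train(reward_fn, actions, steps=2000, lr=0.1, seed=0):
    """Gradient-bandit learning: nudge preferences in the direction of reward."""
    rng = random.Random(seed)
    prefs = {a: 0.0 for a in actions}
    for _ in range(steps):
        probs = softmax(prefs)
        # Sample a response according to the current policy.
        choice = rng.choices(list(probs), weights=list(probs.values()))[0]
        reward = reward_fn(choice)  # +1 for good behavior, -1 for bad
        for a in prefs:
            # The chosen action moves with the reward; the others move the opposite way.
            indicator = 1.0 if a == choice else 0.0
            prefs[a] += lr * reward * (indicator - probs[a])
    return softmax(prefs)


if __name__ == "__main__":
    # Hypothetical reward signal standing in for human feedback.
    feedback = lambda response: 1.0 if response == "helpful" else -1.0
    policy = train(feedback, ["helpful", "biased"])
    print({a: round(p, 3) for a, p in policy.items()})
```

Run it and the probability of the "helpful" response climbs toward 1 while the "biased" response fades, a miniature version of the feedback dynamic Shaotran describes. The step count, learning rate, and reward values are arbitrary choices for the illustration.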

Gary Bolles, Chair for the Future of Work at Singularity University, author of The Next Rules of Work:

Robots and software do not take jobs — they don't — they simply automate tasks. It's a human's decision if all of those tasks that are automated add up to a job that goes away and we can make better decisions. We don't have to decide that that's a discardable human being.

If half the innovators in the room decided to build business models that could actually try to help humans to be able to continually adapt to the pace and scale of change, to be able to solve the problems of tomorrow, and their business models required them to have that positive impact in the world, then all of the things I'm trying to depress you about around jobs are less likely to happen.

Right now we have almost 10 million open jobs in the United States and yet we have 5.6% unemployment. And so, there's a mismatch. There's humans that could be doing work and we could be helping them to be able to solve those problems. And instead, what we're doing is we're spending a ton of time saying we envision all those jobs going away.

And that's not what history so far has told us nor is it a law of nature. It's not an inevitable outcome.

…

Think of people who work and people who pay for work as sitting at opposite ends of a table, constantly negotiating, constantly co-creating. And when that table tilts, things get out of whack.

So the reason that 330,000 UPS workers have a contract and half as many SAG-AFTRA and WGA workers don't is that the table is tilted differently.

The UPS workers had more balance and more power, and the SAG-AFTRA workers have the dependency on business models that are much more extractive.

Daniel Erasmus, European Founder of Erasmus AI, which has been operating for 18 years:

We're now in the epicenter of a place that calls itself the center of an information technology revolution. But revolutions are nasty, scary things. The information technology revolution we had in Europe with the printing press resulted in massive changes across all of Europe, and across the whole world.

…

There is something else happening in the world that's more existential than what we have discussed with AI. There's another disruption. By 2050, we're expecting 200 million climate refugees. The latest reports put that at a billion by 2060.

That's 27 years from now. The US barely manages to deal with 25,000 today. Europe, the second-largest political project in the world, barely survived one and a half million refugees during the Syrian war.

What does that mean for institutions? We should be looking out. Our question should be, how can we use these AI technologies to address the existential threat of climate change that we're facing here? In what ways can we use this technology to shift our future to a sustainable one?

Dazza Greenwood, Exec Dir of MIT Computational Law Report, Task Force on Responsible Use of Gen AI in Law:

I think that the most important real risk, not necessarily the most exotic potential risk, that I'm coming across with respect to the use of Generative AI in the field of law, is it's almost too good. It's seductively good. And yet it's hardly perfect. It has flaws. It has limits. It hallucinates, it has biases.

The main thing I want to talk about is automation bias: the risk that humans might get too dependent on AI. I'm a heavy user of this technology to assist in all parts of the sequence of production, refinement, and other tasks. It is very tempting, after many hours, many days, and many weeks, to just copy and paste something that looks good enough at a glance. Yet this is the road to ruin.

The thing with automation bias is it's not just a bad look when something's subpar and gets into the work stream, but it could have serious consequences in certain areas. There's a lot of automation happening already in cockpits, in emergency rooms, in decision support. Now with the advent of Generative AI, it's happening more broadly.

We need now to start to develop a better immune system in general, and fundamentally with respect to Generative AI, to think critically, to review the outputs, to ask the hard questions, and never to just simply accept them, but to take them as a starting point. We need to now build up an entire new set of muscles and practices, where we know how to review, and never over-rely upon the output.

I'm going to say this hidden beast of a risk is so subtle, and it's so seductive — to fall backwards into the warm bath of just using this technology. But don't do it. Don't over-rely — rely on just the right amount.

Earl Comstock, Senior Policy Counsel for White & Case, former Senior Advisor to U.S. Secretary of Commerce:

A lot of what you can do with AI depends on your access to infrastructure, it depends on your access to data pools. And so an inequality that exists today can be either exacerbated or taken down.

Guess who owns the big data pools? It's the same big companies. Now, those companies are all trying to do what's best for their own interests. And the challenge for startups is how do you get recognized, and how do you align yourself with or educate the regulators when your interests differ from the big companies' interests?

The one good thing I would say is, look, we've been through these revolutions before. It's new, it's novel and people are going to say, nobody's seen this ever before. But trust me, if you go back through history, we've been through numerous industrial revolutions, the information revolution, all of these things, and we've somehow managed to muddle through.

…

And the key point I would make as somebody who's been both on Capitol Hill and in the Executive Branch is that the struggle for the regulator is figuring out how do I motivate behavior? And what they're going to end up doing is looking at liability.

Because at the end of the day when somebody gets hurt, we're going to go to the courts and the courts are going to have to wrestle with this. And the courts are going to basically apply the traditional laws that they've applied for literally hundreds and hundreds of years.

And they're going to, at the end of the day say: you're responsible. The question is, who is you? It may be the AI developer, it may be the middleman, it may be the person who decided to use it.

But as you go through this, depending on how you're using that tool, think about your liability, think about what you need to do to address that liability. Because when it gets into court, people are going to say, what did you do? Did you ignore this risk? Did you think about it? Did you take reasonable steps?

…

Then when you get to the regulation side, Congress or the government will assign that liability to somebody. The regulation could be light touch or it could be heavy touch, and it could be right on or it could be right off.

It's not just Congress, it's the agency that then is tasked with implementing the broader language that Congress wrote. The tech community needs to come together and not hide stuff from them but actually educate them. You have to tell them what is actually going on.

Brian Behlendorf, CTO of the Linux Foundation’s Open Source Security Foundation, Board Member of the Electronic Frontier Foundation:

Open source software today, by numerous different studies, is consumed by about 98% of the software products that are out there. An average app, a phone, a car, websites you go and visit. And in fact, 70% to 90% of the lines of code within those objects tend to be pre-existing open source code.

Open source is on a 30-year trajectory. The term was defined in 1998, but really we were building the internet using open source code before that. The open source movement has gone from the rebel outsider image, and “let's keep the internet free and fair,” to becoming the new establishment.

…

The good news is because of this vibrancy of the open source ecosystem around AI, it's gotten cheaper to build models. The trend line for that is much faster than Moore's Law.

In fact, the Stanford Alpaca model, built on Meta's LLaMA, apparently cost about $600 to train to something at the level of GPT-3. Now, that's a claim they've made. I know there's debate, but this stuff is getting better faster.

Also thanks to open source code, we've moved away from needing all of the data there at once to build one big model and towards a more modular approach where you can pick up a pre-existing foundational model, add to it other models that you've built.

So this kind of combinatorics of models gives us a bunch of options and gives us a bunch of efficiency we didn't have before.

And the big picture here is it allows you to start to think about not just making API calls into OpenAI, but building a startup based on building your own models, working from the training sets, benefiting from this rich open source ecosystem, and having more agency as a startup or as a mid-size company.

If we see the trend lines continue in a few years, the idea of personal AIs starts to get much more pragmatic.

…

AI by itself as a technology is not comparable to nuclear or bioweapons. It's comparable to any other kind of information technology that has amplified how we work out there.

And the right approach to addressing those harms, to addressing those concerns, I believe, and I think a lot of others share this, is to equalize access to that AI.

Open source software is a pathway to do that. Collective action by the developers working on collective models and building upon each other's work is the right way to do that.

…

The European Union views itself as the Silicon Valley of regulation. Three different MEPs told me that when I visited them earlier this month. Which is a little absurd, but they're very proud of the GDPR and I just have to say, it's adorable.

Their view is more of the precautionary principle. If you don't understand a thing, then we have to wait until we figure out what its ramifications are before we can permit it. That's going to throttle the European artificial intelligence scene. It's going to throttle the ability to use AI to fight AI harms.

…

The Chinese are a little bit at the opposite extreme. And don't think of China as a monolith. Even the government's interests are split among two or three different groups.

They're hoping somebody hits the pause button in the West so that they can move farther faster. They see the same trend lines and are less worried about the lack of access to Nvidia chips than others.

The thing to think though is the Chinese can also be allies of ours in the fight against AI harms. While there are things we might not like with China, it's not very easy to try to keep an AI model from outputting the word “democracy.” It's going to be a lot harder, frankly, to keep these tools from actually being the tools of liberation if we agree to work with the Chinese on this AI stuff.

Even though there's big risks out there, I'm really eager to use technology to fight those risks and I hope all the rest of you are too.

I want to recognize those whose support makes this ambitious project by Reinvent Futures possible, including these extended quotes: our partners Shack15, the club in the Ferry Building in San Francisco, and Cerebral Valley, the community of founders and builders in Generative AI; and our sponsors Autodesk, Slalom, Capgemini, and White & Case, who help with the resources and the networks that bring it all together. We could not do this without all of them.
